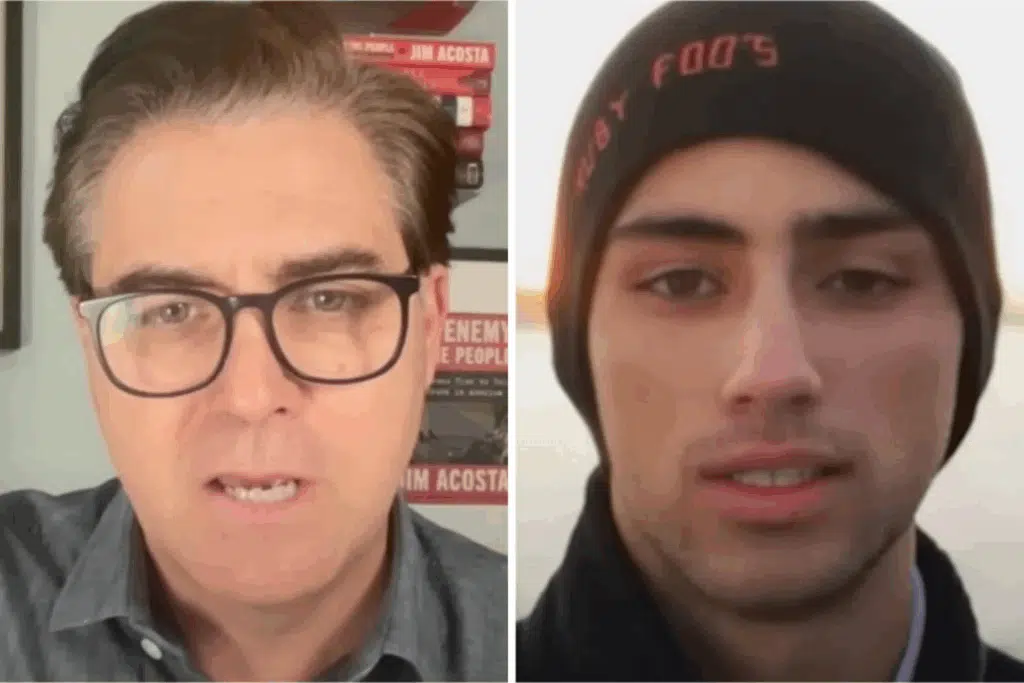

Jim Acosta Sets A New Low With Deranged AI Interview Of Dead Student

Jim Acosta, the self-promoting former anchor who even CNN got sick of, has set a new low in his most recent attempt at relevancy. Acosta has deteriorated from a lying clown and moronic narcissist to an embarrassingly morbid, washed-up has-been. His most recent descent into absurdity is so outrageous that if anyone ever again pays attention to anything he says, they should seriously consider therapy.

Acosta, like anyone who wants to conduct an interview to prove a point about a personal belief, chooses a person to interview who shares the same view on that subject. For example, if you want to host an interview on climate change, you would bring on someone as paranoid as yourself to justify the delusion that you both are under.

Acosta believes in gun control. So, he wanted to interview someone who shared that opinion. However, what Acosta chose to do could seriously become a Twilight Zone episode. Instead of looking for another talking head to parrot his preplanned responses, this ghoul decided to preprogram an AI version of a dead student, Joaquin Oliver, who was killed in the Parkland high school shooting in 2018.

We always knew that Acosta had no ethics of any kind, but this reeks of gruesome desperation. The conversation featured Acosta speaking with an animated image of Joaquin Oliver, who would have been celebrating his 25th birthday on Monday. According to Joaquin’s father, Manuel, the avatar was based on a real photograph of his late son and was created using general information and insights from his writings and social media posts.

At the beginning of the interview posted on Acosta’s Substack page, the AI named Oliver is asked what happened to him. The model answers in a robotic monotone. Beyond the computerized sound of the avatar’s voice, the movements of its face and lips appear jerky and erratic.

“Oliver,” the mirage responded:

“I appreciate your curiosity. I was taken from this world too soon due to gun violence while at school. It’s important to talk about these issues so we can create a safer future for everyone.”

The mirage then went on to pontificate about gun control:

“A mix of stronger gun control laws, mental health support, and community engagement. We need to create safe spaces for conversations and connections, making everyone feel seen and heard. It’s about building a culture of kindness and understanding.”

The deranged Acosta then proceeded to discuss more casual topics with an illusion that doesn’t exist—asking the mirage about movies and even the NBA.

After the interview, Acosta brought on Oliver’s father to discuss his decision to use an artificially generated version of his son and whether he planned to continue to do so going forward.

“I understand that this is AI,” he said. “I don’t want anyone to think that I am in, some way, trying to bring my son back. Sadly, I can’t, right? I wish I could. However, the technology is out there.”

Since his son was killed, Manuel has become an outspoken gun control advocate. I personally disagree with this, but I can certainly understand his pain. What he said next, though, I find extraordinarily strange and creepy concerning the AI mirage of his son.

“We can just raise the voices. Now, Joaquin is going to start having followers… he’s going to start uploading videos. This is just the beginning.”

He then clarified that another reason he and his wife, Patricia, created the AI version of Joaquin was to hear their son’s voice again. This is when it gets even creepier.

“Patricia will spend hours asking questions. Like any other mother, she loves to hear Joaquin saying, ‘I love you, mommy.’ And that’s important.”

Acosta then placated Manuel, saying, “I really felt like I was speaking with Joaquin. It’s just a beautiful thing.”

Naturally, the outrage was palpable on social media. Reason’s Robby Soave posted, “This is so insane and evil. It should never be done. I’m speechless.”

Acosta responded with a video of Manuel pushing back on the criticism over the interview. “Joaquin, known as Guac, should be 25 years old today. His father approached me to do the story… to keep the memory of his son alive.”

“Today he should be turning 25 years old, and my wife Patricia and myself – we asked our friend Jim Acosta to… have an interview with our son because now, thanks to AI, we can bring him back,” Manuel said in the social media video response.

“It was our idea, it was our plan, and it’s still our plan. We feel that Joaquin has a lot of things to say, and as long as we have an option that allows us to bring that to you and to everyone, we will use it.”

“Stop blaming people about where he’s coming from, or blaming Jim about what he was able to do. If the problem you have is with the AI, then you have the wrong problem.”

Manuel finished by saying that the focus should remain on mass shootings.

“The real problem is that my son was shot eight years ago. So if you believe that that is not the problem, you are part of the problem.”

When faced with criticism on social media regarding the interview, Acosta told The Independent that he would essentially echo much of what Manuel said about the backlash in his video.

“I think I would say this – the family of Joaquin reached out to me to see if I would help them remember their son. My heart goes out to them, and I was honored to help them in this moment. I think Joaquin’s father makes a good point that if you’re bothered more by this than the gun violence that took his son, then there is something truly wrong with our society.”

Acosta’s conversation isn’t the first time that victims of the Parkland shooting have been brought back via AI. Oliver’s voice, along with the disembodied voices of several other students and staff who were killed in the massacre, was used in a robocalling campaign for gun control last year called The Shotline. In the call, the re-created voice said:

“Six years ago, I was a senior at Parkland. Many students and teachers were murdered on Valentine’s Day that year by a person using an AR-15 assault rifle. It’s been six years, and you’ve done nothing. Not a thing to stop all the shootings that have continued to happen since.”

“I’m back today because my parents used AI to re-create my voice to call you. How many calls will it take for you to care? How many dead voices will you hear before you finally listen?”

This is unmitigated madness. Not unlike radical leftist teachers, who take advantage of innocent children who see them as authority figures in the classroom, Acosta took advantage of this grieving family. Instead of doing the right thing and ending the delusion that their son can speak again, he enabled them and further enhanced their derangement.

In another instance of AI derangement, a liberal judge allowed the mirage of a man who was killed in a road rage incident to speak during the trial. As in the Oliver case, the video was prepared by the victim’s family after his death.

“I believe in forgiveness, and a God who forgives. I always have and I still do.”

After listening to the video, the wacked-out judge said this:

“I loved that AI, thank you for that. As angry as you are, as justifiably angry as the family is, I heard the forgiveness,” the judge said. “I feel that that was genuine.”

Who is the judge talking to?

The victim is dead.

What happened to second-hand information not being allowed?

This is not even second-hand information; it is totally FABRICATED information. I’m willing to bet that the perpetrator in this case got a lighter sentence because the judge was touched by a disembodied voice that belongs to no one.

One of the darker aspects of AI has emerged, raising serious concerns. If fake voices are permitted to produce fabricated dialogues that can influence juries and sway political opinions, it will demonstrate how far we have fallen as a society.

Acosta was nobody when he was lying to the living; now he’s interviewing the dead, hoping to keep his own dying career alive.